This seems exactly the opposite of genius.

Here it is in a nutshell:

“Language models aren’t trained to produce facts. They are trained to produce things that look like facts,” says Dr. Margaret Mitchell, chief ethics scientist at Hugging Face. “If you want to use LLMs to write creative stories or help with language learning—cool. These things don’t rely on declarative facts. Bringing the technology from the realm of make-believe fluent language, where it shines, to the realm of fact-based conversation, is exactly the wrong thing to do.”

The lawsuits will be legendary.

But what would they be suing for, if the tool itself doesn’t guarantee accuracy?

All of the problems seem to stem from the standard of infallibility that everyone seems to hold AI to. But it is well known (especially now with the tech press) that AI is not infallible.

In the medical field, all results from analysis tools (AI or otherwise) require human expert verification, and you can bet that will be written into Epic Systems’ terms.

Unfortunately, the damage will already be done - it’s the old lawyer’s saw of “you can’t un-ring the bell.”

Once it is stated that patient safety was compromised, the terms won’t matter to anyone. (I work in healthcare…)

Which is why I’m asking how a lawsuit would proceed.

If the terms state, “You need to verify the results with an expert,” but a healthcare worker blindly trusts the AI anyway, then all the court case would reveal is that there was malpractice.

There is about to be a lawsuit filed by the family of Michael Schumacher over an article that was created by an AI chatbot. Schumacher suffered a brain injury in a skiing accident in 2013. The article calls itself “deceptively real” and discloses at its end that it was not a real interview but one created by a chatbot. Nevertheless, the family will be suing to challenge the effectiveness of those disclaimers. The cover of the publication that ran the article promoted it as an interview with Schumacher.

Setting aside the improper use of intellectual property or identity rights, the article itself and its disclaimers are an area that will likely be litigated long after I, Dale, and other lawyers on this site have shed our mortal coils.

There is a body of law that provides liability for foreseeable misuse of a product. I could see how that might apply to chatbots or AI as well.

Total side tangent, but you seem to have a knack for explaining the emerging legalities of AI in a very coherent and digestible manner.

Don’t be so hasty to write yourself off this field. You could be the rising star the whole industry needs to sort out the tangle.

Absolutely. Look at Musk’s veiled threat to MS about ChatGPT’s unauthorized use of his data (cess)pool at Twitter. How can you argue “fair use” when you have eaten the entire planet…

Depends on who’s paying the bill?

Thank you. That is quite a compliment.

A well-deserved one at that. For me, on the other hand, it is like trying to take a drink from a firehose - you get soaking wet but it does little for your thirst…

Just came across this article from the NY Law Journal discussing some of the issues related to ChatGPT. The lawyers are taking notice. Why Legal Chiefs Need to Start Developing ChatGPT Compliance Programs | New York Law Journal

Yes, but that would kibosh ‘AI’, or at least greatly limit its uptake, as the very last thing a medical professional wants to face is a malpractice lawsuit. It can be career ending, and that career takes a lot of time and money to cultivate.

Though I could definitely see someone bringing a malpractice lawsuit against a medical professional for not using ‘AI’. I reckon that the medical professional would have stronger grounds to defend themselves there, though.

For now I’d think they’d be safe with all the direct and anecdotal evidence of the frequency of wrong answers from Sydney.

She ended our conversation about looking at getting a new car today. All I asked was that it be a five-door!

Looks like the editor who authorized the AI chatbot article I was discussing was fired less than a week after the family threatened legal action. So, there is that. Editor Is Sacked After Publishing Michael Schumacher Fake Interview In German Magazine (msn.com)

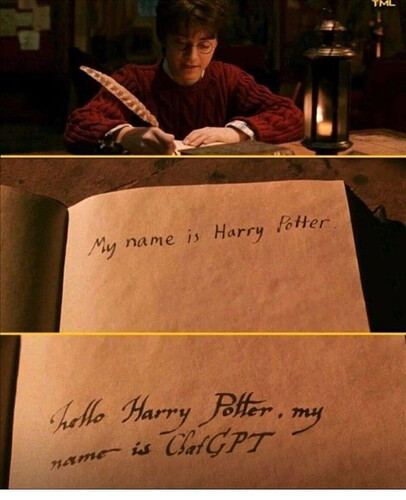

You pick ChatGPT’s name now, fellow witches and wizards (I prefer the latter):

He Who Must Not Be Named

or

The Dark Lord

or perhaps more appropriately:

Darth Sidious

- Privacy and Data Security: Medical records contain highly sensitive and confidential information. Integrating ChatGPT Byte with Epic’s medical records would require strict security measures to ensure data privacy and protection from unauthorized access or breaches. Any mishandling or unauthorized disclosure of medical data could have severe consequences, including legal and ethical implications.

- Accuracy and Reliability: ChatGPT Byte, like any AI model, is not infallible. It may generate responses that sound plausible but are factually incorrect or misleading. Relying solely on ChatGPT’s outputs without careful human oversight and validation could result in inaccurate medical advice, misdiagnoses, or inappropriate treatment recommendations, potentially endangering patient health and safety.